- by Jimmy Fisher

- Nov 02, 2024

U.S. Mortality Data (NCHS)

- By Jimmy Fisher

- Nov 16, 2024

- in Coding Projects

Turning our attention to the darker bookend of human life, let's take a look at some public death data published by the U.S. National Center for Health Statistics (NCHS). We are going to go deep on this one, because only vastly simplified cuts of mortality data are accessible on CDC's Wonder platform.

As ancient alchemists put it, In sterquiliniis invenitur -- in filth it will be found; the greatest treasure is often in the darkest dung heap one does not wish to confront. Or, if you prefer a more sterile, intellectualized instantiation of this idea, the most interesting signals are to be found within the greatest noise.

In this post, you will learn how to download and prepare base, "noisy" NHCS data on U.S. mortality using the Python programming language, completing several layers of data preparation to arrive at a base file on which several analyses can be run.

There is no API for these base data, so let's download the base tables from HERE. At this moment, public U.S. mortality data are available from as far back as 1982, though cross-comparing data across years is often complicated by differences in data capture, encoding, and granularity. At this stage, I will constrain myself to data representing U.S. deaths in 2016 through the current-latest year, 2022.

Check out THIS article for a good primer on these mortality data.

Starting with 2016

I downloaded and unzipped the 2016 file and got a 1.3GB file named VS16MORT.DUSMCPUB, a flat text file with data encoded as fixed-width single strings. The encoding is provided in THIS documentation, and you will notice that there are positions in each record intentionally left blank, either called reserved positions or appended to the end of a variable (e.g., entity axis conditions & record axis positions).

A fixed width file is a plain text file where each column of data has a specific, consistent width within a string of characters. Since the width of each column remains constant, transforming this file into a dataframe required: 1) an array of variable names, and 2) an array of how many characters align to each variable.

This means we can translate this VS16MORT.DUSMCPUB file into a dataframe using the following code:

# Import librariesimport pandas as pd

# Define column widths and names based on the document layoutcolumns = pd.DataFrame({ "widths": [ #clump 1 19, 1, 40, 2, 1, 1, 2, 2, 1, 4, 1, 2, 2, 2, 2, 1, 1, 1, 16, 4, 1, 1, 1, 1, 34, 1, 1, 4, 3, 1, 3, 3, 2, 1, 2,

#clump 2 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1, 6, 1,

#clump 3 36, 2, 1,

#clump 4 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1, 4, 1,

#clump 5 1, 2, 1, 1, 1, 1, 33, 3, 1, 1 ], "names": [ #clump 1 "reserved_positions1", "resident_status", "reserved_positions2", "education_1989", "education_2003", "education_flag", "month_of_death", "reserved_positions3", "sex", "detail_age", "age_sub_flag", "age_recode_52", "age_recode_27", "age_recode_12", "infant_age_recode_22", "place_of_death", "marital_status", "day_of_week_of_death", "reserved_positions4", "current_data_year", "injury_at_work", "manner_of_death", "method_of_disposition", "autopsy", "reserved_positions5", "activity_code", "place_of_causal_injury", "icd10", "358_cause_recode", "reserved_positions6", "113_cause_recode", "130_infant_cause_recode", "39_cause_recode", "reserved_positions7", "num_entity_axis_conditions",

#clump 2 (ICD coded conditions from the death certificate listed in order of appearance) "cert_dx1", "reserved_positions8", "cert_dx2", "reserved_positions9", "cert_dx3", "reserved_positions10", "cert_dx4", "reserved_positions11", "cert_dx5", "reserved_positions12", "cert_dx6", "reserved_positions13", "cert_dx7", "reserved_positions14", "cert_dx8", "reserved_positions15", "cert_dx9", "reserved_positions16", "cert_dx10", "reserved_positions17", "cert_dx11", "reserved_positions18", "cert_dx12", "reserved_positions19", "cert_dx13", "reserved_positions20", "cert_dx14", "reserved_positions21", "cert_dx15", "reserved_positions22", "cert_dx16", "reserved_positions23", "cert_dx17", "reserved_positions24", "cert_dx18", "reserved_positions25", "cert_dx19", "reserved_positions26", "cert_dx20", "reserved_positions27",

#clump 3 "reserved_positions28", "num_record_axis_conditions", "reserved_positions29",

#clump 4 (ICD coded conditions from the death certificate normalized using TRANSAX) "dx1", "reserved_positions30", "dx2", "reserved_positions31", "dx3", "reserved_positions32", "dx4", "reserved_positions33", "dx5", "reserved_positions34", "dx6", "reserved_positions35", "dx7", "reserved_positions36", "dx8", "reserved_positions37", "dx9", "reserved_positions38", "dx10", "reserved_positions39", "dx11", "reserved_positions40", "dx12", "reserved_positions41", "dx13", "reserved_positions42", "dx14", "reserved_positions43", "dx15", "reserved_positions44", "dx16", "reserved_positions45", "dx17", "reserved_positions46", "dx18", "reserved_positions47", "dx19", "reserved_positions48", "dx20", "reserved_positions49",

#clump 5 "reserved_positions50", "race", "bridged_race_flag", "race_imp_flag", "race_recode_3", "race_recode_5", "reserved_positions51", "hispanic_origin", "reserved_positions52", "hispanic_origin_race_recode" ]})

# Read in the fixed-width file using the column widths and assign column namesfile_path = "D:/d4ph/nchs-death/VS16MORT.DUSMCPUB"

# Load the datamort2016 = pd.read_fwf(file_path, widths=columns["widths"], names=columns["names"])

# Remove unnecessary columns beginning with "reserved_positions"mort2016_cleaned = mort2016.loc[:, ~mort2016.columns.str.startswith("reserved_positions")]

I put the widths and names into clumps to make it easier for you to follow the code and compare it against the documentation. The Python library pandas has a function that uses these arrays to import a field width file (fwf) called read_fwf. Finally, I removed all of the "reserved_positions" placeholders.

Another way to handle clumps 2 and 4 would have been to store each entity/record axis code as its own string and then trim the trailing space at the end of each code (the 7th character of the axis entity values in clump 2, and the 5th character of each record axis value in clump 4). I thought this incremented "reserved_positions" approach might make more sense to some students, so that's what you see in the above code.

And we have our 2016 file ready to go.

2017-2022

Repeating the same process, additional dataframes were generated for the 2017 to 2022 data. A few notes during this process:

- 2017 data conform to the same fixed widths and variable encoding structure (Yay!)

- In 2018, a 40-level imputed race variable called "Race Recode 40" was added

- In 2020, several decedent occupation and industry variables were added

- In 2021, a new variable was added to delineate residents from nonresidents. Also, education_1989 and a few race classification/recode variables were removed

- In 2022, the reserved positions between hispanic_origin_race_recode and race_recode_40 (just prior to the final occupational data) were removed

HERE is the Jupyter Notebook file to convert all of THESE Mortality Multiple Cause Files (2016-2022) into workable dataframes and export them to .csv files.

Great. So now what?

The next step is to handle variable encoding. Each variable in these dataframes uses a number to represent some value, instead of listing a whole text string. So, for instance, looking at our education_2003 variable ("Education" in the 2022 documentation, reflecting the 2003 revision), the number 6 would indicate that decedent had completed a Bachelor's degree, whereas a 2 would reflect some high school but no diploma.

Before we explore a use case for these data, a few things should be said of race/ethnicity variables in general, purely from an epidemiological perspective. The vast oversimplification of human genetic diversity into discrete categories is extraordinarily academic. As the 2022 documentation puts it: single-race data are not comparable with bridged-race data, and Race Recode 6 data in 2022 are not comparable with data using Race Recode 5 for earlier years.

Here are the "race and Hispanic origin" variables mapped (provided or imputed) for decedents in 2022:

Race Recode 6 : based on single race which is consistent with 1997 OMB race standards, replacing "Race Recode 5" which was based on bridged race consistent with 1977 OMB standards.

Hispanic Origin : Delineates between Spaniard, Mexican, Central American, South American, Latin American, Puerto Rican, Cuban, Dominican, and Other Hispanic

Hispanic Origin/Race Recode : New to 2022, this is an amalgamation of Hispanic origin with single race consistent with 1997 OMB race standards.

Race Recode 40 : Available since 2018, provides single and multiple-race combinations reflecting 1997 OMB race-reporting guidelines, though not directly comparable with previous versions.

It gets even messier when attempting to compare between years, bridge with other data sets, or generalize observed mortality variability patterns to actual living populations (without reference to specific genetic differences).

But I digress. Let's look at some of these data.

Code (on GitHub) <- includes data prep

Deployment

The "Unique Categories" numbers quantify how many unique combinations of the 4 included race/ethnicity variables exist in the data (i.e., Race Recode 6, Race Recode 40, Hispanic Origin, and Hispanic Origin/Race Recode). In addition to exemplifying how a basic Dash app can visualize data, these data indicate how dubiously slippery it can get to classify the messiness of actual genetic diversity into the neat, sterile categories pre-packaged for our consumption by politically-minded sophists.

Perhaps, in the future, more detail on the so-called "White race" might find its way into datasets such as NCHS mortality data, but for now, the 104 groups (e.g., Russian, Lithuanian, Irish, Germanic, English, Libyan, Syrian, Pennsylvania Dutch, Australian, Egyptian, Jewish, Italian, etc.) identified in a separate U.S. Census table from 2020 might only be used to justify carving out a new "non-White" group or two in the likely-next authoritative declaration on how we are all supposed to separate our single, human race into proper, government-approved partitions more amenable to the in-group/out-group zeitgeist animating post-modern pundits of substitutes for functional culture.

The next step in meaningfully tackling these mortality data is to understand how proximate causes of death are simplified into recoded variables based on ICD10 codes. For those unfamiliar, ICD (International Classification of Diseases) codes represent nested hierarchies of patient diagnoses, with each successive character within a code adding additional detail to the classification. The "10" in ICD10 points to it being the 10th edition, with each successive edition fixing issues and generally adding more specificity.

For example, in the ICD10 framework, M00-M99 pertain to diseases of the musculoskeletal system and connective tissue (at the time of this writing, containing ~8.6k separate codes). Conditions of osteoarthritis, for instance, are represented by codes M15 to M19, with M17 signifying osteoarthritis of the knee, M17.3 unilateral post-traumatic osteoarthritis of a knee, and M17.32 unilateral post-traumatic osteoarthritis of the left knee. You can explore this hierarchy of ICD10 disease classification in more detail HERE.

To make mortality analyses more manageable, these myriad codes have been recoded, placed into simpler, more generalized categories. There are 4 mortality cause recodes, 1 of which pertains only to infants. The 3 overlapping all-ages "cause of death" recodes we have in our mortality records are 39_cause_recode, 113_cause_recode, and 358_cause_recode, and in this step we will map them together alongside primary ICD cause of death (icd10 in our prepped dataframes).

Right now, these recodes are represented as numbers, each of which represents a class of ICD10 codes, with decreasing resolution moving from 358 categories to 113 to 39. For instance, looking at the associated data dictionary for 2022 (HERE), we see overlaps in codes such as the following for I00 to I09.

358_cause_recode:

113_cause_recode:

39_cause_recode:

We will map the names associated with each code after confirming that this nesting pattern appears consistent throughout the recode schema. To confirm this, I built a quick Dash app to explore how these variables fit together (screenshot and link to notebook below).

Selecting various icd10, 358_cause_recode, 113_cause_recode, and 39_cause_recode values returns corresponding values from the other columns, followed by a Sankey diagram that shows the expected decline in resolution across those 4 variables. Here is the heart disease Sankey aligning to the above (39_cause_recode == 22):

This diagram shows how a cacophony of icd10 codes (left) flows into a single, simple 39-category recode (right). Importantly, as we move rightward towards decreasing granularity, no node splits into more than 1 lower-resolution category. Thus, we have confirmed it feasible to leverage a single hierarchy in our explorations of these data, nested from biggest to smallest category:

39_cause_recode -> 113_cause_recode -> 358_cause_recode -> icd10

I will use an animated Sunburst chart to show 2022 mortality by each of these 4 levels of granularity and incorporate names for our 39, 113, and 358 cause recode schema. This is a helpful step because ICD10 codes are a big deal in healthcare contexts; since these recodes are consistent across years, mapping them will enable several future mortality analyses. Also, animated Sunburst Charts are awesome. ☺️

For the animated Sunburst chart, I will leverage the fantastic work of data sculptor Vasco Asturiano (https://github.com/vasturiano/sunburst-chart) and put the 2022 data into a zoomable sunburst chart. To do this, I need to convert my dataframe into the requisite .json file for this visualization.

HERE is the Jupyter Notebook I used to: 1) build the above Sankey Dash app, 2) map recode numbers to named causes of death, 3) export total deaths by causes for 2016-2022, and 4) convert 2022 data into a .json with cause of death names instead of numbers for each recode level, for the d3 Sunburst viz.

CLICK HERE TO EXPLORE THE ANIMATED SUNBURST VIZ OF 2022 U.S. MORTALITY DATA

Now that we've dealt with recode cross-mapping, and had some fun with d3.js, let's prepare a multi-year dataset with features of interest to use in future analyses. Specifically, I would like to be able to compare distributions of mortality by age group, sex, education, place of death, manner of death (e.g., natural, suicide, homicide), and recoded proximate causes of death (i.e., the above recodes) across multiple years. To remain both practical and empirical, I will for now ignore the race/ethnicity artifice and consider only the 40-category race recoding. Notwithstanding inherent insufficiencies of conflated and capricious changes to these categorical delineations (divorced from nationality), this 40-category variable (called, in the above prep, race_recode_40) is available in every year of our data since 2018. So, we'll drop 2016 and 2017 for this step. ¯\_(ツ)_/¯

Here is my variable selection in the order they are listed within the 2018, 2019, 2020, 2021, and 2022 NCHS Multiple Cause mortality data documentation:

- Education (by our preparation, education_2003)

- Sex (sex)

- Age (selected age_recode_27)

- Place of Death and Decedent's Status (place_of_death)

- Manner of Death (manner_of_death)

- Cause of Death (39_cause_recode, 113_cause_recode, and 358_cause_recode)

- Race/ethnicity (race_recode_40)

Concatenating 2018-2022 results in a single dataframe of 15,859,795 death records in the U.S. from 2018 to 2022, all of which include our selected variables.

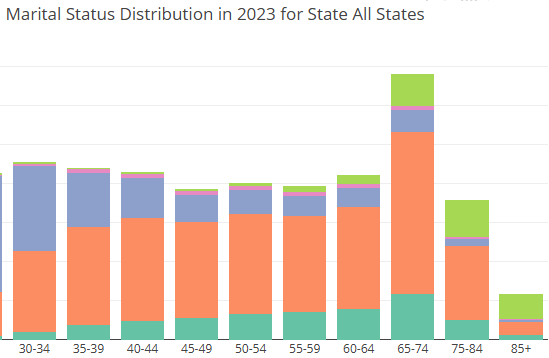

As part of my initial exploratory data analysis of this giant NCHS U.S. mortality file, I built a Dash app to demonstrate how intermediate visualizations can make data easier to explore for programmers working on projects. I mapped encoding values onto the hover-overs to make each viz easier to understand, and added in some axis controls. The purpose of this viz is to explore the dataset as a data scientist, so I'm not going to spend a lot of time polishing it, but here is a quick walkthrough.

There are 4 visualizations controlled by the top global filters:

You can select a value from the 39, 113, or 358 cause recodes and also toggle on/off male and female records.

At the top-left is a line graph showing deaths by sex and year for the selected cause (in this case, "Malnutrition" from the 113 Cause Recode). The top-right shows deaths for the selected year (2022) on a scatter/jitter plot, with Age Recode 27 and Race Recode 40 selected for my axes. The hover-over box you see within the top-right viz shows the raw values for race_recode_40 and age_recode_27 from our data, but also the description (desc) of that value for the selected variable, such as "Samoan" and "75-79 years old".

The first of the lower two visualizations is a stacked bar-chart at the at the lower left, showing the distribution of deaths for the selected cause recode by year and age group. While this tile defaults to "All Years" (2018-2022), I selected 2022 and hovered over one segment, spawning a hover-over box telling us that 1,375 men 85-89 years of age died of malnutrition in the United States.

The fourth and final viz is a horizontal stacked bar chart with a toggle to show either where people were when they died or the manner of their death (e.g., natural, suicide, homicide, etc.). In the below screenshot for malnutrition, hovering over the orange segment of Place of Death 4 shows interpretable detail, that 5,520 women died in 2022 of malnutrition while at their homes.

The entire Jupyter Notebook is available on GitHub and the Dash App visualizer is available for your exploration through the following links:

Code (on GitHub) <- includes data concatenation and the Dash app

NOTE: At the top of the Dash app code, you'll notice after loading the cause recode maps from .csv files, I created mappings for race, age, education, manner of death, and place of death. Then, I could reference them in each visualization's dataframe. The general layout of Dash apps is covered in THIS great article, and you can find some sophisticated examples HERE, but consider the code in blocks. First, below the variable maps, you'll find an app layout where the global filters and visualization locations (2 in the top row & 2 in the bottom row). Following this layout, each visualization is built in turn, beginning with @app.callback before specifying outputs and inputs. Take your time and, if you have access to an LLM, one great way to better understand the code is to copy-paste a section into it and ask for a detailed explanation.

For those interested in building visualizations such as these professionally, keep in mind that additional refinements to this approach would likely be necessary for laypersons to more easily navigate it, such as a single year selector affecting all tiles. The explicit purpose of this dashboard is to help data scientists with exploratory data analysis prior to conducting statistical tests and building AI/ML models.