- by Jimmy Fisher

- Oct 19, 2024

Logistic Regression

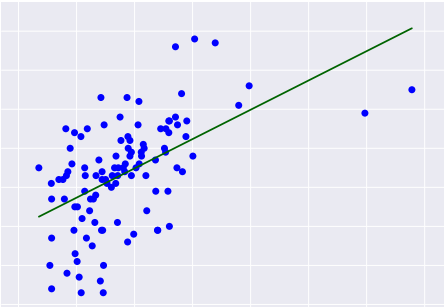

Logistic regression is a crucial technique in data science and statistics, primarily used for binary classification tasks. This method predicts the probability of a binary outcome based on one or more predictor variables, making it a widely used tool in various industries such as finance and healthcare. The essence of logistic regression lies in its ability to model the probability of class membership using the logistic function, ensuring outputs remain between 0 and 1.

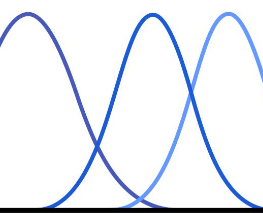

The Logistic Function

The logistic function at the core of this technique is expressed as:

$$P(Y = 1 \mid X) = \frac{1}{1 + e^{-Z}}$$

Let's look at this equation's parts...

- Core Structure: $\frac{1}{1 + e^{-Z}}$, where $Z = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n$

- Exponential Component: $e^{-Z}$, which keeps the output between 0 and 1

- Coefficients and Variables:

$\beta_0$: Intercept

$\beta_1, \beta_2, \dots, \beta_n$: Coefficients for each variable

$X_1, X_2, \dots, X_n$: Independent variables

In practice, this equation gives the probability of a binary outcome as a function of the weighted sum of the independent variables. Each coefficient describes how a one-unit increase in its variable shifts the log odds of the outcome, holding all other predictors constant.
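To make the equation concrete, here is a minimal sketch in plain Python that computes the linear predictor and passes it through the logistic function. The intercept, coefficients, and feature values are hypothetical numbers chosen only for illustration, and the helper names `sigmoid` and `predict_probability` are my own.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function: maps any real number into the range 0 to 1."""
    # Two branches avoid overflow in math.exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_probability(intercept, coefficients, features):
    """P(Y = 1 | X) for a single observation."""
    # Linear predictor: intercept plus the weighted sum of the features.
    z = intercept + sum(b * x for b, x in zip(coefficients, features))
    return sigmoid(z)

# Hypothetical values, for illustration only.
intercept = -1.0
coefficients = [0.5, -0.25]
features = [2.0, 4.0]

p = predict_probability(intercept, coefficients, features)
print(round(p, 4))  # 0.2689
```

Here the linear predictor works out to -1.0 + 1.0 - 1.0 = -1.0, and the logistic function turns that into a probability of roughly 27%.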

Example in Python

This example demonstrates how scikit-learn can simplify the creation and evaluation of a logistic regression model, delivering quick insights into dataset outcomes.
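A minimal sketch of what such a scikit-learn workflow could look like is shown here. It assumes a synthetic stand-in dataset with three hypothetical predictors (age, BMI, and blood pressure) and a binary diabetes label; the coefficients used to generate the labels are made up, and the 70/30 split and seed of 42 are illustrative choices.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (assumption): three hypothetical predictors.
rng = np.random.default_rng(42)
n = 500
age = rng.uniform(20, 80, n)
bmi = rng.uniform(18, 40, n)
blood_pressure = rng.uniform(60, 120, n)
X = np.column_stack([age, bmi, blood_pressure])

# Labels drawn from a logistic model with made-up coefficients.
z = -12.0 + 0.05 * age + 0.2 * bmi + 0.03 * blood_pressure
y = rng.binomial(1, 1.0 / (1.0 + np.exp(-z)))

# Hold out 30% of the rows for testing; random_state fixes the split.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

# Note: scikit-learn applies L2 regularization by default (C=1.0).
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Probability of the positive class, and class labels at the 0.5 cutoff.
y_prob = model.predict_proba(X_test)[:, 1]
y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.2%}")
```

With a real dataset, the synthetic arrays would be replaced by columns loaded from a file, and the rest of the steps would stay the same.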

Example in R

This code is roughly equivalent to the Python code above. One useful thing to know is that Large Language Models (LLMs) such as ChatGPT can help you write code and even explain code that you find online. So, for example, copy-pasting this R script into OpenAI's GPT-4o model and asking for an explanation yields the following:

This code implements a logistic regression model in R to predict whether a person has diabetes based on age, BMI, and blood pressure. Here’s a step-by-step explanation:

Load Libraries:

- `readr`: Used for reading CSV files.

- `caret`: Provides functions for splitting data into training and testing sets, as well as calculating model accuracy.

- `dplyr`: Used for data manipulation (though it’s not actively used in this code).

Load the Dataset:

- The dataset is loaded from a file called `diabetes.csv` using `read_csv` and assigned to the variable `data`.

Define Features and Target:

- The features (predictor variables) are selected from the columns `Age`, `BMI`, and `BloodPressure`.

- The target variable (`y`), which represents whether a person has diabetes, is extracted from the column `Diabetes`.

Combine Features and Target:

- `cbind` combines the feature matrix `X` and target variable `y` into a single data frame called `dataset` for easier handling in the `caret` functions.

Train-Test Split:

- `createDataPartition` splits `dataset` into training (70%) and testing (30%) sets.

- `set.seed(42)` ensures reproducibility so that the same data split is achieved every time the code runs.

Train the Logistic Regression Model:

- `glm` trains a logistic regression model using `Age`, `BMI`, and `BloodPressure` as predictors for `Diabetes`. The `family = binomial` parameter specifies logistic regression.

Prediction and Evaluation:

- `predict` generates predictions on the test data (`test_data`). Setting `type = "response"` returns probabilities.

- `ifelse` converts these probabilities into binary predictions (1 for diabetes, 0 for no diabetes), based on a threshold of 0.5.

- The `accuracy` of the model is calculated by comparing predictions (`y_pred_class`) with actual outcomes in the test set.

- Finally, `cat` displays the model’s accuracy as a percentage.

In summary, the code reads a dataset, splits it, trains a logistic regression model on a subset of the data, makes predictions, and evaluates the model’s accuracy.

Pretty good output from my simple prompt of "Explain this code!"
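The prediction and evaluation step from that explanation (turning probabilities into 0/1 labels at a 0.5 threshold, then measuring accuracy) is easy to sketch in plain Python. The probabilities and true labels here are invented for illustration, and `classify` and `accuracy` are hypothetical helper names rather than functions from any library.

```python
def classify(probabilities, threshold=0.5):
    """Convert predicted probabilities into binary class labels."""
    return [1 if p > threshold else 0 for p in probabilities]

def accuracy(predicted, actual):
    """Fraction of predictions that match the actual outcomes."""
    matches = sum(1 for p, a in zip(predicted, actual) if p == a)
    return matches / len(actual)

# Made-up model outputs and true labels, for illustration only.
y_prob = [0.91, 0.12, 0.55, 0.40, 0.73]
y_true = [1, 0, 0, 0, 1]

y_pred_class = classify(y_prob)
print(y_pred_class)  # [1, 0, 1, 0, 1]
print(f"Accuracy: {accuracy(y_pred_class, y_true) * 100:.2f}%")  # Accuracy: 80.00%
```

The 0.5 cutoff is a convention, not a requirement. Lowering it flags more cases as positive, which can be the better trade-off when missing a positive case is costly.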

Logistic regression is extraordinarily useful for binary classification, where the outcome variable has two possible values (e.g., yes/no, 0/1). It is valued for its simplicity and interpretability, as it provides coefficients that describe the contribution of each predictor variable to the log odds of the outcome. Data scientists can leverage this method to create predictive models that quantify how each variable influences the probability of a specific outcome. Logistic regression builds on concepts from linear regression but is adapted for classification by modeling the log odds of an outcome’s probability rather than the outcome itself.
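The log-odds interpretation can be checked numerically. This short sketch, using a hypothetical one-variable model with made-up coefficients, shows that increasing the predictor by one unit adds the coefficient to the log odds, which is the same as multiplying the odds by the exponential of the coefficient.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))

def log_odds(p: float) -> float:
    """Logit: the log of the odds p / (1 - p)."""
    return math.log(p / (1.0 - p))

# Hypothetical one-variable model, for illustration only.
beta0, beta1 = -2.0, 0.7
x = 1.0

p_before = sigmoid(beta0 + beta1 * x)
p_after = sigmoid(beta0 + beta1 * (x + 1.0))

# Ratio of the odds after and before the one-unit increase.
odds_ratio = (p_after / (1.0 - p_after)) / (p_before / (1.0 - p_before))

# The log odds move by exactly beta1 ...
print(math.isclose(log_odds(p_after) - log_odds(p_before), beta1))  # True
# ... so the odds are multiplied by exp(beta1).
print(math.isclose(odds_ratio, math.exp(beta1)))  # True
```

With a coefficient of 0.7, each additional unit of the predictor roughly doubles the odds of the outcome, since exp(0.7) is about 2.01.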