- by Jimmy Fisher

- Oct 19, 2024

Principal Component Analysis (PCA)

- in Techniques

It was a long day at work, so I asked my supervisor LLM to direct the specialized 7 AI-agent network I programmed last weekend and write this post for me. This is what it came up with.

Understanding Principal Component Analysis (PCA): A Comprehensive Guide

Large, high-dimensional datasets can be daunting to manage. Principal Component Analysis (PCA) is a powerful tool for simplifying such datasets while losing as little information as possible. As a dimensionality reduction technique, PCA emphasizes variation and helps reveal hidden patterns in high-dimensional data. This guide builds a deeper understanding of PCA, walks through Python and R implementations, and shares insights on assessing PCA model performance.

What is Principal Component Analysis (PCA)?

Principal Component Analysis (PCA) is a statistical technique that transforms data into a set of linearly uncorrelated variables called principal components. The key objective of PCA is to reduce the dataset's dimensionality while retaining most of its variability. The first component points in the direction of greatest variance, and each subsequent component captures the greatest remaining variance while staying orthogonal to the ones before it, so no component repeats information carried by another. In this way PCA captures the essence of the data, simplifying analysis and visualization.

Why Use PCA?

- Dimensionality Reduction: Essential for cutting down the number of variables in large datasets.

- Noise Reduction: Keeping only the leading components discards low-variance directions, which often carry mostly noise.

- Visualization: Transforms complex data, making it easier to visualize in 2D or 3D spaces.

- Feature Extraction: Highlights significant features for machine learning models.

How is PCA Performed?

- Standardize the Data: PCA is scale-sensitive, so variables should be standardized first.

- Calculate the Covariance Matrix: Captures how each pair of variables varies together.

- Compute Eigenvectors and Eigenvalues: Identifies the principal components; eigenvectors define their directions and eigenvalues measure the variance along each.

- Sort Eigenvectors: Rank them by descending eigenvalue to prioritize the components that explain the most variance.

- Transform Data: Project the standardized data onto the top-ranked eigenvectors to obtain the new feature space.
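To make the five steps concrete, here is a minimal NumPy sketch that follows them one by one. It is only an illustration under some assumptions: the helper name pca_from_scratch and the synthetic data are made up for this example, and production libraries typically use a singular value decomposition rather than an explicit covariance matrix.

```python
import numpy as np

def pca_from_scratch(X, n_components):
    """Hypothetical helper: PCA via eigendecomposition of the covariance matrix."""
    X = np.asarray(X, dtype=float)

    # 1. Standardize each column to zero mean and unit variance.
    X_std = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

    # 2. Covariance matrix of the standardized features (features x features).
    cov = np.cov(X_std, rowvar=False)

    # 3. Eigendecomposition; eigh is meant for symmetric matrices
    #    and returns eigenvalues in ascending order.
    eigenvalues, eigenvectors = np.linalg.eigh(cov)

    # 4. Sort eigenvectors by descending eigenvalue.
    order = np.argsort(eigenvalues)[::-1]
    eigenvalues = eigenvalues[order]
    eigenvectors = eigenvectors[:, order]

    # 5. Project the standardized data onto the leading eigenvectors.
    components = eigenvectors[:, :n_components]
    scores = X_std @ components
    explained_ratio = eigenvalues[:n_components] / eigenvalues.sum()
    return scores, components, explained_ratio

# Synthetic demo data (an assumption): two strongly correlated features plus one independent one.
rng = np.random.default_rng(0)
base = rng.normal(size=(200, 1))
X_demo = np.hstack([
    base + 0.1 * rng.normal(size=(200, 1)),
    2.0 * base + 0.1 * rng.normal(size=(200, 1)),
    rng.normal(size=(200, 1)),
])

scores, components, explained_ratio = pca_from_scratch(X_demo, n_components=2)
print("Explained variance ratio:", explained_ratio)
```

Because the first two features move together, most of their shared variance should end up in the first component, and the resulting scores are uncorrelated with each other.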

Practical Implementation of PCA

Below, we explore PCA's application using both Python and R.

PCA in Python

Code Explanation (Python)

- Import Libraries:
  - numpy: Used for numerical computations.
  - pandas: Used for data manipulation and analysis.
  - sklearn.preprocessing.StandardScaler: Scales features to standardize data for PCA.
  - sklearn.decomposition.PCA: Performs Principal Component Analysis.
  - matplotlib.pyplot: Used for plotting the PCA results.

- Load the Dataset:
  - The dataset is read into a DataFrame. Replace 'your_dataset.csv' with the actual file path.

- Feature Selection:
  - Selects specific features from the dataset to apply PCA. Ensure that all feature names are correctly spelled, and that ['feature3'] is corrected to 'feature3'.

- Standardization:
  - Standardizes features to have zero mean and unit variance. This is crucial for PCA since it is sensitive to the scale of input data.

- PCA Transformation:
  - The PCA object is configured to reduce dimensions to 2 principal components.
  - fit_transform computes the principal components and reduces the data's dimensionality.

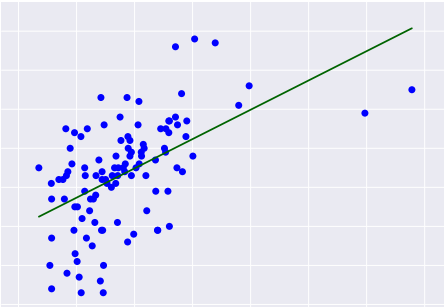

- Visualization:
  - The results are visualized using a scatter plot where the two principal components are plotted against each other.

- Performance Assessment:
  - explained_variance_ratio_ gives the proportion of the dataset's variance captured by each principal component.

- Output:
  - The scatter plot shows the distribution of the data in reduced dimensions.
  - The explained variance ratio helps interpret how much of the data's variability is captured by the selected components.
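The walkthrough above can be sketched roughly as follows. This is a hedged reconstruction, not necessarily the exact script being described: the column names feature1 to feature3 are placeholders, and a small synthetic DataFrame stands in for 'your_dataset.csv' so the snippet runs on its own.

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.preprocessing import StandardScaler
from sklearn.decomposition import PCA

# Stand-in for pd.read_csv('your_dataset.csv'): synthetic data (an assumption)
# so the sketch is runnable without an external file.
rng = np.random.default_rng(42)
latent = rng.normal(size=200)
df = pd.DataFrame({
    "feature1": latent + 0.2 * rng.normal(size=200),
    "feature2": 3.0 * latent + 0.5 * rng.normal(size=200),
    "feature3": rng.normal(size=200),
})

# Feature selection: a flat list of column-name strings (placeholder names).
features = ["feature1", "feature2", "feature3"]
X = df[features].to_numpy()

# Standardization: zero mean and unit variance per feature.
X_scaled = StandardScaler().fit_transform(X)

# PCA transformation: keep 2 principal components.
pca = PCA(n_components=2)
principal_components = pca.fit_transform(X_scaled)

# Visualization: scatter plot of the two components.
fig, ax = plt.subplots()
ax.scatter(principal_components[:, 0], principal_components[:, 1], alpha=0.6)
ax.set_xlabel("Principal Component 1")
ax.set_ylabel("Principal Component 2")
ax.set_title("PCA projection")
# plt.show()  # uncomment when running in an interactive session

# Performance assessment: share of variance captured by each component.
print("Explained variance ratio:", pca.explained_variance_ratio_)
```

To use real data, swap the synthetic DataFrame for pd.read_csv with your own file path and update the features list to match your column names.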

PCA in R

Code Explanation (R)

- Load Libraries:
  - ggplot2: Used for visualization (can be extended if needed for PCA plotting).
  - data.table: Provides fast and efficient data reading and manipulation.
  - caret: Used for preprocessing (e.g., scaling features).

- Load Dataset:
  - The dataset is read using fread() for efficient loading. Replace 'your_dataset.csv' with your actual file path.

- Feature Selection:
  - A vector of column names (features) specifies the dataset features to be used for PCA.

- Standardization:
  - The scale() function standardizes the selected features to have a zero mean and unit variance, ensuring PCA is not influenced by feature scaling differences.

- PCA Computation:
  - prcomp() performs Principal Component Analysis. The center = TRUE and scale. = TRUE arguments ensure that the data is centered and scaled as part of the PCA process.

- Visualization:
  - biplot() generates a biplot that shows both the principal components' scores (data points in reduced dimensions) and loadings (original variables' contributions to the components).

- Performance Assessment:
  - The explained variance ratio for each principal component is extracted from the summary() of the PCA result. It shows how much variance is captured by each component.

- Output:
  - The biplot provides a visual representation of the data in reduced dimensions.
  - The explained variance ratios give insights into the effectiveness of dimensionality reduction and how well the selected principal components capture the data's variability.
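For readers who want to see the two ingredients of a biplot, scores and loadings, as plain numbers, here is a rough Python counterpart using scikit-learn. It is a sketch on synthetic data with placeholder feature names, not a translation of the R script described above. Two caveats apply: the sign of each component is arbitrary, and StandardScaler divides by the population standard deviation while R's scale() uses the sample standard deviation, so scores can differ from prcomp() by a constant factor.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Synthetic data (an assumption): feature1 and feature2 are strongly
# negatively correlated, feature3 is independent noise.
rng = np.random.default_rng(1)
latent = rng.normal(size=150)
X = np.column_stack([
    latent + 0.3 * rng.normal(size=150),
    -latent + 0.3 * rng.normal(size=150),
    rng.normal(size=150),
])
feature_names = ["feature1", "feature2", "feature3"]  # placeholder names

X_scaled = StandardScaler().fit_transform(X)
pca = PCA(n_components=2).fit(X_scaled)

# Scores: each observation's coordinates in the reduced space
# (roughly what prcomp() stores in $x).
scores = pca.transform(X_scaled)

# Loadings: each original variable's weight in each component
# (roughly what prcomp() stores in $rotation), shape (n_features, n_components).
loadings = pca.components_.T

for name, row in zip(feature_names, loadings):
    print(f"{name}: PC1={row[0]:+.2f}, PC2={row[1]:+.2f}")
```

Variables whose loadings on a component have opposite signs pull that component in opposite directions, which is what the arrows in a biplot convey visually.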

Assessing PCA Model Performance

The explained variance is the primary metric for evaluating PCA performance. It indicates the portion of the dataset's total variance captured by each principal component. When the first few components capture most of the variance, the data can be represented in far fewer dimensions with little loss. The cumulative explained variance therefore guides how many components to retain for an effective representation of the data.
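One common way to turn this into a decision is to keep the smallest number of components whose cumulative explained variance reaches a chosen cutoff. The sketch below assumes scikit-learn and synthetic data; the 0.95 threshold is purely illustrative and should be picked to suit the problem.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Synthetic data (an assumption): 10 observed features driven by
# 3 latent factors plus a little noise.
rng = np.random.default_rng(7)
latent = rng.normal(size=(300, 3))
mixing = rng.normal(size=(3, 10))
X = latent @ mixing + 0.1 * rng.normal(size=(300, 10))

X_scaled = StandardScaler().fit_transform(X)

# Fit with all components so the full variance profile is available.
pca = PCA().fit(X_scaled)

# Running total of the variance explained by the first k components.
cumulative = np.cumsum(pca.explained_variance_ratio_)

threshold = 0.95  # illustrative cutoff, not a universal rule
# First index where the running total reaches the threshold, converted to a count.
n_keep = int(np.searchsorted(cumulative, threshold) + 1)

print("Cumulative explained variance:", cumulative.round(3))
print("Components to keep:", n_keep)
```

scikit-learn's PCA also accepts a fraction between 0 and 1 as n_components and picks the component count for you, which can replace the manual search here.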

Huh... it did pretty well, I think, and it says that it is "SEO Optimized," which is good, I guess, but I'll add a few closing thoughts.

PCA is a kind of unsupervised learning technique, meaning that you do not specify predictor and outcome variables. Instead, you feed in a bunch of data, and the PCA algorithm finds the combinations of variables along which the data vary the most. It kind of clumps correlated variables together and treats each clump as a separate variable.

Imagine, for example, a bowl of mixed fruit -- blueberries, strawberries, orange slices, apples, and grapes, all mixed together -- where you've measured each piece's weight, diameter, color, and sweetness. You hand the measurements over to PCA and it looks for the directions that maximize the differences between pieces. In this situation, weight and diameter rise and fall together, so PCA might fold them into a single component. The algorithm wouldn't, however, name it. It would just notice that this combination accounts for much of the spread in the data. Sorting the fruit into piles by type is a different job (clustering), though plotting the first couple of components often makes such piles easier to see.

In practice, the resulting components have to be looked at and named by the data scientist (sometimes in conjunction with so-called "subject matter experts"), a task usually less straightforward than a bowl of fruit. Check out my PCA data projects for some practical applications, and consider looking through the following links for more detail:

- https://www.geeksforgeeks.org/principal-component-analysis-pca/

- https://www.nature.com/articles/nmeth.4346

- https://towardsdatascience.com/principal-component-analysis-made-easy-a-step-by-step-tutorial-184f295e97fe

- https://www.youtube.com/watch?v=ZgyY3JuGQY8

- https://www.youtube.com/watch?v=dhK8nbtii6I

As with most every other skill, the way to get better at it is to do it, over and over again.