- by Jimmy Fisher

- Oct 19, 2024

Random Forest Models (RFM)

- in Techniques

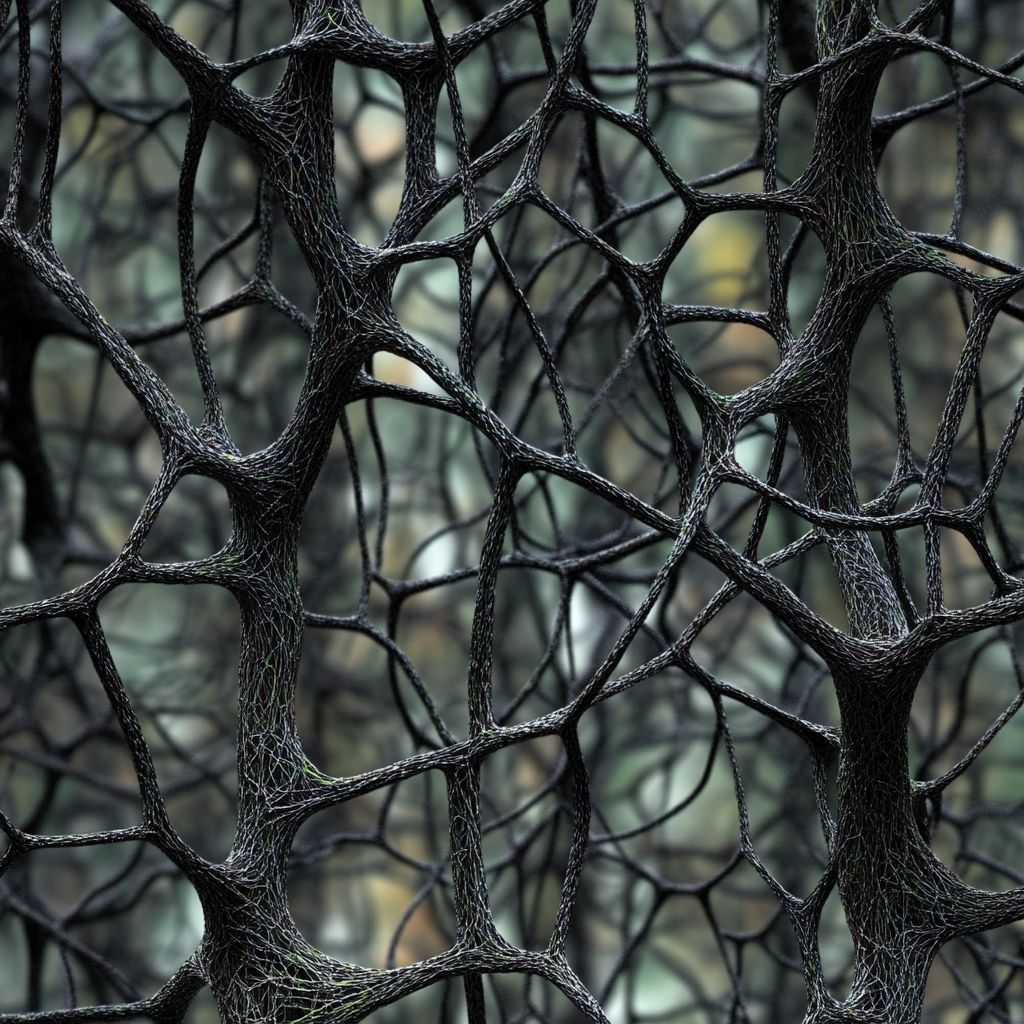

Random Forest Models (RFMs) have become a staple in the data science toolkit, praised for their flexibility and interpretability across diverse applications. They are a form of ensemble learning in which multiple decision trees are constructed and aggregated to improve predictive performance. Each tree in the "forest" is trained on a bootstrap sample of the data (rows drawn at random with replacement), and each split considers only a random subset of the features, which decorrelates the trees, reduces overfitting, and improves generalization. For classification, the final prediction is the mode (majority vote) of the individual trees' predictions; for regression, it is the mean of their predictions. Random forests also provide a feature importance ranking, which aids feature selection and offers insight into the data’s characteristics.
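To make the aggregation step concrete, here is a minimal sketch for the regression case. It assumes scikit-learn and uses synthetic data from `make_regression`; the data and the variable names (`per_tree`, `manual_mean`) are illustrative assumptions, not part of the original examples.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

# Synthetic data, used only for illustration
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

# bootstrap=True resamples the rows for each tree;
# max_features=2 limits the candidate features examined at each split
forest = RandomForestRegressor(
    n_estimators=25, max_features=2, bootstrap=True, random_state=0
)
forest.fit(X, y)

# Collect each tree's predictions: shape (n_trees, n_samples)
per_tree = np.stack([tree.predict(X) for tree in forest.estimators_])

# Averaging across trees should reproduce the forest's own prediction
manual_mean = per_tree.mean(axis=0)
forest_pred = forest.predict(X)
print("Matches forest.predict:", np.allclose(manual_mean, forest_pred))
```

Note that for classifiers, scikit-learn averages the trees' predicted class probabilities rather than counting hard votes, so its result usually, but not always, coincides with the mode of the individual trees' labels.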

Python Code Examples

RFM Classification with Categorical Response Variable

In this example, we load the Iris dataset, split it into training and testing sets, and then train a random forest classifier. The model's performance is evaluated using a classification report and accuracy score.

The Iris dataset is a widely used dataset in machine learning and statistics, primarily for classification tasks. It is one of the best-known datasets for pattern recognition and is often used to test classification algorithms. It contains measurements of 150 iris flowers from three species (50 per species), and the goal is to classify a flower's species from its four features: sepal length, sepal width, petal length, and petal width.
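Before modeling, it can help to confirm the dataset's layout. The following is a small sketch, assuming scikit-learn's bundled copy of Iris; `class_counts` is an illustrative name.

```python
import numpy as np
from sklearn.datasets import load_iris

iris = load_iris()

# Rows are flowers, columns are the measured features
print("Shape:", iris.data.shape)
print("Features:", iris.feature_names)
print("Species:", list(iris.target_names))

# Count how many flowers belong to each species (targets are coded 0, 1, 2)
class_counts = np.bincount(iris.target)
print("Flowers per species:", class_counts)
```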

    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import classification_report, accuracy_score

    # Load the Iris dataset
    iris = load_iris()  # Load the dataset from sklearn
    X, y = iris.data, iris.target  # Separate features (X) and target (y)

    # Split the data into training and testing sets
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=42
    )  # 70% for training, 30% for testing

    # Initialize and train a Random Forest model
    rf_classifier = RandomForestClassifier(
        n_estimators=100, random_state=42
    )  # Use 100 trees in the forest
    rf_classifier.fit(X_train, y_train)  # Train the model with the training data

    # Make predictions and evaluate performance
    y_pred = rf_classifier.predict(X_test)  # Predict labels for the test set
    print("Classification Report:\n", classification_report(y_test, y_pred))  # Detailed report
    print("Accuracy Score:", accuracy_score(y_test, y_pred))  # Overall accuracy
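The introduction mentions feature importance ranking. As a rough sketch, assuming the same Iris split and forest settings as the example above, the fitted classifier's `feature_importances_` attribute can be sorted to rank the features. The names `importances` and `order` are illustrative.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=42
)

rf_classifier = RandomForestClassifier(n_estimators=100, random_state=42)
rf_classifier.fit(X_train, y_train)

# Impurity-based importances: one non-negative value per feature, summing to 1
importances = rf_classifier.feature_importances_

# Sort feature indices from most to least important
order = np.argsort(importances)[::-1]
for idx in order:
    print(f"{iris.feature_names[idx]:<20} {importances[idx]:.3f}")
```

These impurity-based scores are computed on the training data, so they are best read as a quick ranking rather than a definitive measure of each feature's effect.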

For Classification RFMs:

- Accuracy: Proportion of correctly classified instances.

- Precision, Recall, F1-score: Offer detailed evaluations for each class.
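To show how these metrics are derived, here is a small worked sketch on hand-made labels (the `y_true` and `y_pred` arrays are invented for illustration, not model output). It computes the metrics with scikit-learn and then recomputes precision, recall, and F1 for one class from raw counts.

```python
import numpy as np
from sklearn.metrics import accuracy_score, precision_recall_fscore_support

# Hypothetical labels for a 3-class problem (illustration only)
y_true = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2, 2])
y_pred = np.array([0, 0, 1, 1, 1, 2, 2, 2, 2, 0])

# Accuracy: fraction of positions where prediction equals truth (7 of 10 here)
accuracy = accuracy_score(y_true, y_pred)

# Per-class precision, recall, and F1 (one entry per label)
precision, recall, f1, support = precision_recall_fscore_support(
    y_true, y_pred, labels=[0, 1, 2]
)

# Recompute the metrics for class 2 from raw counts
cls = 2
tp = np.sum((y_true == cls) & (y_pred == cls))  # true positives
fp = np.sum((y_true != cls) & (y_pred == cls))  # false positives
fn = np.sum((y_true == cls) & (y_pred != cls))  # false negatives
manual_precision = tp / (tp + fp)
manual_recall = tp / (tp + fn)
manual_f1 = 2 * manual_precision * manual_recall / (manual_precision + manual_recall)

print("Accuracy:", accuracy)
print("Class 2 precision/recall/F1:", manual_precision, manual_recall, manual_f1)
```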

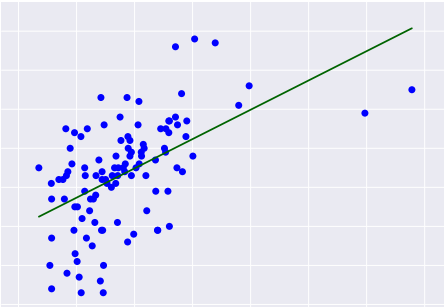

RFM Regression with Continuous Response Variable

In this example, we use the Boston Housing dataset, a classic dataset in machine learning and statistics that is often used to demonstrate regression techniques. It contains information collected by the U.S. Census Service concerning housing in the area of Boston, Massachusetts, and is primarily used to predict housing prices from various features of the neighborhood. We split the data into training and testing sets, train a random forest regressor, and evaluate it with mean squared error and the R² score.

    import numpy as np
    import pandas as pd
    from sklearn.model_selection import train_test_split
    from sklearn.ensemble import RandomForestRegressor
    from sklearn.metrics import mean_squared_error, r2_score

    # Load the Boston housing dataset
    # Note: load_boston was removed in scikit-learn 1.2, so we read the
    # original data file directly (each record spans two lines in the file)
    data_url = "http://lib.stat.cmu.edu/datasets/boston"
    raw_df = pd.read_csv(data_url, sep=r"\s+", skiprows=22, header=None)
    X = np.hstack([raw_df.values[::2, :], raw_df.values[1::2, :2]])  # 13 features
    y = raw_df.values[1::2, 2]  # Target: median home value (MEDV)

    # Split the data into training and testing sets
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=42
    )  # 70% for training, 30% for testing

    # Initialize and train a Random Forest Regressor
    rf_regressor = RandomForestRegressor(
        n_estimators=100, random_state=42
    )  # Use 100 trees in the forest
    rf_regressor.fit(X_train, y_train)  # Train the model on the training data

    # Make predictions and evaluate performance
    y_pred = rf_regressor.predict(X_test)  # Predict target values for the test set
    print("Mean Squared Error:", mean_squared_error(y_test, y_pred))  # Evaluate MSE
    print("R^2 Score:", r2_score(y_test, y_pred))  # Evaluate R^2 score

For Regression RFMs:

- Mean Squared Error (MSE): The average of squared differences between predictions and actual observations.

- R² Score: Indicates the proportion of variance explained by the model.
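As a quick worked example of these two definitions, the sketch below computes MSE and R² by hand with NumPy and compares them with scikit-learn's functions. The four values in `y_true` and `y_pred` are made up for illustration.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

# Hypothetical observations and predictions (illustration only)
y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.5, 5.5, 7.0, 8.0])

# MSE: mean of the squared residuals
residuals = y_true - y_pred
mse_manual = np.mean(residuals ** 2)

# R^2: 1 minus (residual sum of squares / total sum of squares)
ss_res = np.sum(residuals ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2_manual = 1 - ss_res / ss_tot

print("MSE (manual, sklearn):", mse_manual, mean_squared_error(y_true, y_pred))
print("R^2 (manual, sklearn):", r2_manual, r2_score(y_true, y_pred))
```

Here the squared residuals sum to 1.5 over four points, giving an MSE of 0.375, and the total sum of squares is 20, giving an R² of 0.925.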

R Code Examples

RFM Classification with Categorical Response Variable (with Iris dataset)

    # Load necessary libraries
    library(datasets)      # Contains the Iris dataset
    library(caret)         # For data partitioning and performance evaluation
    library(randomForest)  # For building the Random Forest model

    # Load the Iris dataset
    data(iris)       # Load the dataset
    X <- iris[, -5]  # Extract features (first 4 columns)
    y <- iris[, 5]   # Extract the target variable (species)

    # Split the data into training and testing sets
    set.seed(42)  # Set seed for reproducibility (also governs the forest below)
    trainIndex <- createDataPartition(y, p = 0.7, list = FALSE)  # 70% training data
    X_train <- X[trainIndex, ]   # Training features
    X_test <- X[-trainIndex, ]   # Testing features
    y_train <- y[trainIndex]     # Training target
    y_test <- y[-trainIndex]     # Testing target

    # Initialize and train the Random Forest model
    # (randomForest() has no seed argument; reproducibility comes from set.seed above)
    rf_classifier <- randomForest(
      x = X_train,
      y = y_train,
      ntree = 100,       # Number of trees in the forest
      mtry = 2,          # Number of variables to consider at each split
      importance = TRUE  # Compute variable importance
    )

    # Make predictions and evaluate performance
    y_pred <- predict(rf_classifier, X_test)        # Predict target values for test data
    conf_matrix <- confusionMatrix(y_pred, y_test)  # Create a confusion matrix

    # Output results
    print(conf_matrix)  # Print detailed confusion matrix and metrics
    print(paste("Accuracy Score:", conf_matrix$overall["Accuracy"]))  # Print accuracy

RFM Regression with Continuous Response Variable (with Boston dataset)

    # Load necessary libraries
    library(MASS)          # Contains the Boston dataset
    library(caret)         # For train-test splitting and evaluation
    library(randomForest)  # For building the Random Forest model

    # Load the Boston dataset
    data("Boston")      # Load dataset from MASS package
    X <- Boston[, -14]  # Extract features (all columns except the last)
    y <- Boston[, 14]   # Extract target variable (medv: median value of owner-occupied homes)

    # Split the data into training and testing sets
    set.seed(42)  # Set seed for reproducibility (also governs the forest below)
    trainIndex <- createDataPartition(y, p = 0.7, list = FALSE)  # 70% training data
    X_train <- X[trainIndex, ]   # Training features
    X_test <- X[-trainIndex, ]   # Testing features
    y_train <- y[trainIndex]     # Training target
    y_test <- y[-trainIndex]     # Testing target

    # Initialize and train the Random Forest Regressor
    # (randomForest() has no seed argument; reproducibility comes from set.seed above)
    rf_regressor <- randomForest(
      x = X_train,
      y = y_train,
      ntree = 100,  # Number of trees in the forest
      mtry = 4      # Number of variables randomly sampled as candidates at each split
    )

    # Make predictions and evaluate performance
    y_pred <- predict(rf_regressor, X_test)  # Predict target values for test data

    # Compute evaluation metrics
    mse <- mean((y_test - y_pred)^2)  # Mean Squared Error
    r2 <- 1 - (sum((y_test - y_pred)^2) / sum((y_test - mean(y_test))^2))  # R-squared score

    # Output the results
    print(paste("Mean Squared Error:", round(mse, 2)))  # Print Mean Squared Error
    print(paste("R^2 Score:", round(r2, 2)))            # Print R-squared score