- by Jimmy Fisher

- Oct 19, 2024

Data Dimensionality Reduction

- in Techniques

Data dimensionality reduction in AI is the process of reducing the number of input variables, or features, in a dataset while retaining as much of the relevant information as possible. The reduced dataset can then be used to build efficient models with a wide range of machine learning algorithms. This matters because high-dimensional data can lead to overfitting, longer training times, and higher computational costs.

There are several approaches to accomplishing this, including:

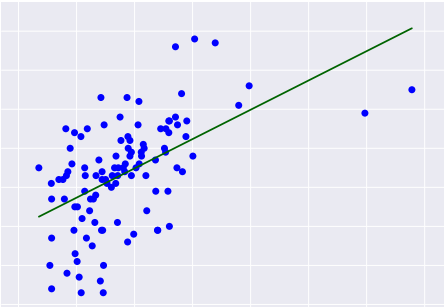

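- Principal Component Analysis (PCA) is an orthogonal linear transformation that re-expresses the data along new, uncorrelated axes called principal components, ordered by how much of the data's variance each one captures. Keeping only the first few components reduces the number of dimensions while preserving as much of the total variance as possible and discarding redundant, correlated directions.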

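- Non-negative Matrix Factorization (NMF) approximates a non-negative data matrix as the product of two smaller non-negative matrices, so each sample is described by a short vector of non-negative weights over a small set of learned components. This makes large datasets easier to manipulate and process.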

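- Feature selection keeps only the most informative of the original features, for example by measuring how strongly each feature correlates with the target variable or by dropping features that are highly correlated with one another. Unlike the other methods listed here, it keeps a subset of the original features rather than constructing new ones.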

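- Autoencoders are neural networks trained to reproduce their input: an encoder compresses the data into a lower-dimensional representation, and a decoder reconstructs the original input from it. Because the network must reconstruct the input accurately, the compressed representation preserves the data's essential structure.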
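To make the PCA description above concrete, here is a minimal NumPy sketch. It assumes samples are rows and features are columns; the function name `pca` and the synthetic data are illustrative and not from the original post. It centers the data, takes the singular value decomposition (a standard way to compute principal components), and projects onto the top `k` directions.

```python
import numpy as np

def pca(X, k):
    """Project the rows of X onto the top-k principal components.

    Returns (Z, components, explained_variance_ratio).
    """
    X = np.asarray(X, dtype=float)
    # Center each feature so the components describe variance around the mean.
    Xc = X - X.mean(axis=0)
    # Rows of Vt are orthonormal principal directions, ordered by
    # decreasing singular value (i.e. decreasing variance).
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]
    # Variance along each direction is proportional to its squared singular value.
    explained = S**2 / np.sum(S**2)
    # Coordinates of each sample in the k-dimensional component space.
    Z = Xc @ components.T
    return Z, components, explained[:k]

rng = np.random.default_rng(0)
t = rng.normal(size=200)
# Three correlated features that mostly vary along a single direction.
X = np.column_stack([t, 2 * t, -t]) + 0.01 * rng.normal(size=(200, 3))
Z, components, ratio = pca(X, k=1)
print(Z.shape)  # (200, 1)
print(ratio)    # close to 1: one component captures almost all the variance
```

Here three features collapse to one with almost no loss of variance. In practice, scikit-learn's `sklearn.decomposition.PCA` class provides the same idea with more options.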
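The NMF bullet above can be illustrated with Lee and Seung's multiplicative update rules, one common way to fit NMF under a squared-error loss. This is a sketch, not a production solver; the function name `nmf`, the iteration count, and the synthetic rank-3 matrix are all illustrative assumptions.

```python
import numpy as np

def nmf(V, k, n_iter=200, eps=1e-10, seed=0):
    """Approximate a non-negative matrix V (m x n) as W (m x k) @ H (k x n).

    Uses multiplicative updates for the Frobenius-norm loss and returns
    W, H, and the reconstruction error recorded at each step.
    """
    V = np.asarray(V, dtype=float)
    rng = np.random.default_rng(seed)
    m, n = V.shape
    # Random non-negative starting factors.
    W = rng.random((m, k))
    H = rng.random((k, n))
    errors = [np.linalg.norm(V - W @ H)]
    for _ in range(n_iter):
        # Each update multiplies by a non-negative ratio, so entries stay >= 0;
        # eps guards against division by zero.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
        errors.append(np.linalg.norm(V - W @ H))
    return W, H, errors

rng = np.random.default_rng(1)
# A 20 x 30 non-negative matrix that has an exact rank-3 factorization.
V = rng.random((20, 3)) @ rng.random((3, 30))
W, H, errors = nmf(V, k=3)
print(W.shape, H.shape)  # (20, 3) (3, 30)
print(errors[0], errors[-1])
```

Each of the 20 rows is now described by 3 non-negative weights instead of 30 values. scikit-learn also offers `sklearn.decomposition.NMF` for real workloads.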
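One simple version of the correlation-based feature selection described above is a filter that ranks features by the absolute Pearson correlation with the target and keeps the top `k`. This is only one of several selection criteria, and the helper `select_by_correlation` and the synthetic data are hypothetical examples rather than a method from the post.

```python
import numpy as np

def select_by_correlation(X, y, k):
    """Return (indices of the k features with the largest |Pearson r| vs. y, all r)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    # Pearson correlation of each column with the target.
    r = (Xc.T @ yc) / (np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc))
    # Rank by absolute value: a strong negative correlation is informative too.
    order = np.argsort(-np.abs(r))
    return np.sort(order[:k]), r

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 5))
# The target depends only on features 0 and 3; the other columns are noise.
y = 3 * X[:, 0] - 2 * X[:, 3] + 0.1 * rng.normal(size=500)
selected, r = select_by_correlation(X, y, k=2)
print(selected)  # [0 3]
X_reduced = X[:, selected]  # keep only the selected original columns
```

A correlation filter only sees linear, one-feature-at-a-time relationships, so features that matter only in combination can be missed.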
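Autoencoders are usually built with a deep-learning framework and nonlinear layers. To show the encode/decode idea from the bullet above in a few lines, the sketch below deliberately uses a purely linear encoder and decoder trained by plain gradient descent on the mean squared reconstruction error. The function name, learning rate, and iteration count are illustrative assumptions. A linear autoencoder like this is known to learn the same subspace as PCA's top `k` components, so nonlinear layers are what let real autoencoders go beyond PCA.

```python
import numpy as np

def train_linear_autoencoder(X, k, lr=0.05, n_iter=3000, seed=0):
    """Train encoder W_e (d x k) and decoder W_d (k x d) by gradient descent
    on the mean squared reconstruction error of X (n x d)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    # Small random weights so training starts near the zero map.
    W_e = 0.1 * rng.normal(size=(d, k))
    W_d = 0.1 * rng.normal(size=(k, d))
    losses = []
    for _ in range(n_iter):
        Z = X @ W_e           # encode: n x k latent codes
        R = Z @ W_d - X       # decode, then take the reconstruction residual
        losses.append(np.sum(R**2) / n)
        G = 2 * R / n         # gradient of the loss w.r.t. the reconstruction
        grad_W_d = Z.T @ G
        grad_W_e = X.T @ (G @ W_d.T)
        # Update both layers using gradients from the same forward pass.
        W_d -= lr * grad_W_d
        W_e -= lr * grad_W_e
    return W_e, W_d, losses

rng = np.random.default_rng(0)
# 4-dimensional data that actually lies near a 2-dimensional subspace.
latent = rng.normal(size=(200, 2))
X = latent @ rng.normal(size=(2, 4)) + 0.05 * rng.normal(size=(200, 4))
X = X - X.mean(axis=0)
X = X / X.std()  # rescale so the fixed learning rate is stable
W_e, W_d, losses = train_linear_autoencoder(X, k=2)
codes = X @ W_e  # the compressed 2-dimensional representation
print(codes.shape)  # (200, 2)
print(losses[0], losses[-1])
```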

By using these methods, data scientists can retain relevant information while reducing overfitting, training time, and computational cost. Simpler models are often more interpretable as well. Finally, effective dimensionality reduction makes it easier to build accurate models that generalize to new data. For these reasons, these methods are a common part of the AI/ML pipeline.